When Google launched Nano Banana 2, it didn't just drop another AI model—it started a tiny creative revolution. I remember the first demo (late one night, too much coffee): the images kept the same face across scenes, again and again. That consistency, so rare until then, made designers and indie filmmakers sit up and take notice. Suddenly, anyone could sketch a character and see a convincing, photorealistic version within minutes. Wild, right?

Its standout feature is character consistency. Earlier AI image generators often ran aground when asked to render the same character across many different scenes; Nano Banana largely cracked the code. It keeps facial features, expression, lighting, and overall style steady regardless of pose, background, or outfit. Whether in close-up portraits or complex scenes, the character stays recognizably the same.

Yet, with this leap in creative power came a wave of debate. When AI can flawlessly replicate the essence of human-made art, the question naturally arises: where does genuine creativity stop, and imitation begin?

Google Mixboard showed up alongside Nano Banana and felt like a jam session for creators. It’s not just software—it’s a messily brilliant shared canvas where you can mash up a Nano Banana portrait, throw in a beat you hummed on the subway, and stitch in someone else’s video clip. The result? A weird, wonderful remix that sometimes clicks—and sometimes doesn’t. That unpredictability is part of the fun.

For creators, this opens unprecedented possibilities: you can design a full campaign, soundtrack, and visual identity from scratch with just a few prompts. But as with every revolution, there’s a flip side, and it starts with new questions about originality.

When AI models like Google Nano Banana AI are trained on huge datasets—including existing art, sounds, and images—they blur the boundary between original work and derivative creation. The remix culture that Mixboard celebrates has reignited an old debate: Can something trained on billions of data points ever be truly original?

Ownership has become the thorniest issue for Nano Banana. Who gets credit for an AI-generated image: the user who requested it, or the company that trained the model?

Google’s current policy, which prioritizes protecting the rights of the majority, should be reconsidered. But beneath the surface lies a bigger question: if Google’s Nano Banana Pro learns from real human artists, people who trained for years, earn a living from their work, and are followed by students, shouldn’t the profits be shared with those artists too?

The situation echoes the early days of sampling, when DJs cut and mixed old records before copyright law caught up with them. Today that debate is back, only this time the AI itself does the remixing.

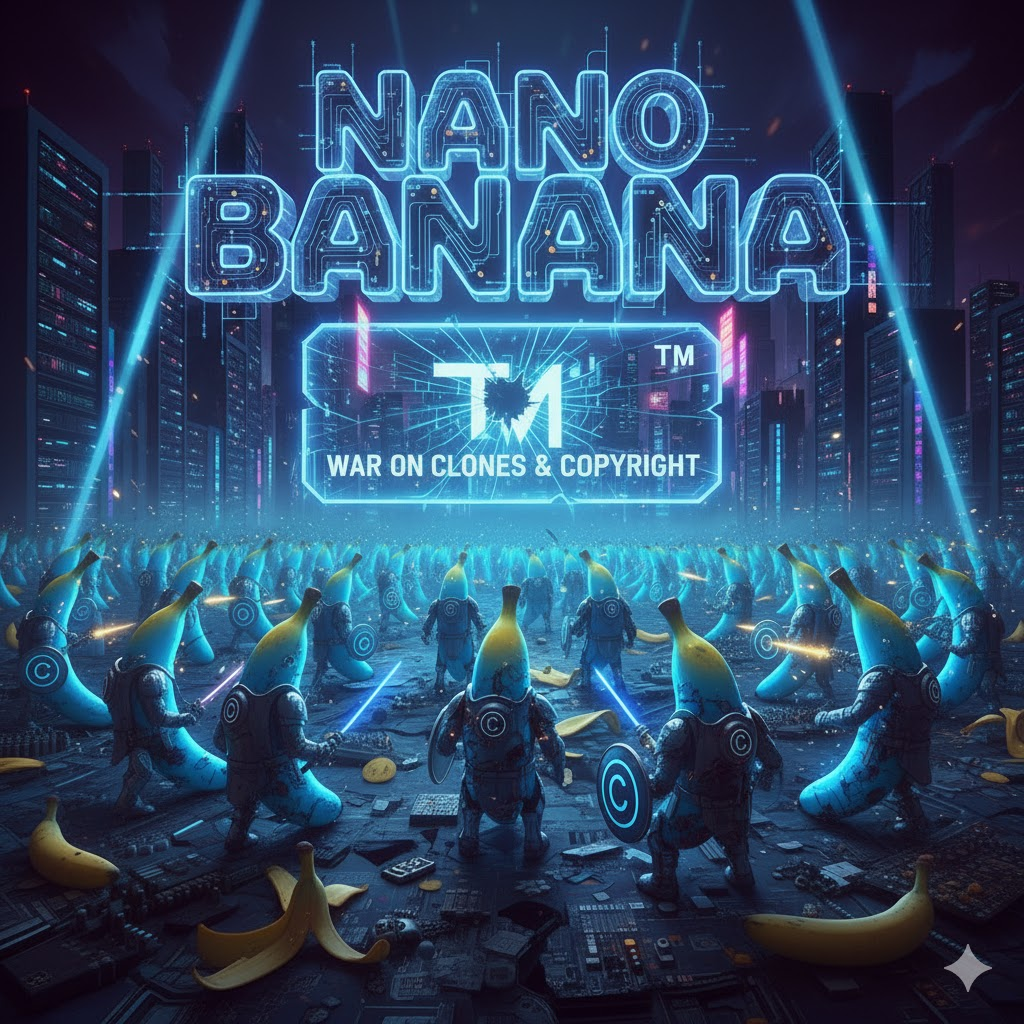

The “clone effect” has become impossible to ignore. Nano Banana can generate strikingly realistic digital copies of people, brands, and even popular fictional characters, and that ability has both fascinated and deeply worried people online.

While many users just play around, creating harmless virtual lookalikes, others are crossing ethical boundaries, producing deepfakes that violate people’s privacy and infringe on copyright. The problem is that even subtle reproductions can cause significant real-world damage, from outright impersonation to serious harm to someone’s reputation.

To be fair, Google has really tried to keep things transparent with the Nano Banana launch. They’ve added features like invisible watermarking, included traceable data in the files, and slapped on tougher usage rules for both Google Mixboard and the Google Nano Banana Pro itself.

Despite all that effort, enforcement is still a massive struggle. AI-generated content spreads at lightning speed, and once it hits the internet, taking it down is nearly impossible. Even when the usage rules are perfectly clear, creators can still cross a legal or ethical line by accident.

Even with those challenges, Google’s approach suggests a genuine commitment to making AI-fueled creativity sustainable rather than chaotic. Nano Banana isn’t being built to replace artists; it’s being built to help them do more.

Regardless of all the legal arguments and ethical fights, the bottom line is that Google Nano Banana AI and Google Mixboard have opened up brand new doors for independent creators all over the world.

A solo artist can now visualize characters, design album covers, and compose ambient soundtracks, all from a single laptop. A filmmaker can storyboard an entire movie using Nano Banana in a matter of days instead of months.

The future isn’t about AI taking over creativity; it’s about expanding what humans can imagine. The real breakthroughs happen when people and algorithms team up, blending human emotion with machine efficiency in ways we never could before.

As lawmakers rush to set rules for generative AI, one thing has become obvious: creativity has changed for good. Future copyright law will likely draw a firmer line between work that was AI-assisted and work that was fully AI-generated. The goal is simple: reward human input and discourage pure imitation.

If managed well, Nano Banana could lead the way in this new era. Google could set the gold standard for ethically sourced training data, consent-based datasets, and proper, transparent credit for creative work.

For artists, the real challenge is figuring out how to use this powerful technology without losing their personal creative process. For tech companies like Google, the mission must be to make sure innovation is always matched with integrity.

Ultimately, the whole conversation around Nano Banana and Google Mixboard isn’t really about technology at all; it comes down to trust.

We’re now living in a world where anyone can instantly create anything. That power can either inspire people or completely mislead them, and it all depends on who has control of it. The fight against digital clones and copyright infringement won't just define the future of AI art; it'll define the very culture of creativity itself.

At the end of the day, the biggest change will not be the algorithm; it will be us.

If we choose responsibility over shortcuts, these tools can amplify real craft. If not, we’ll end up with polished nonsense. I’d rather bet on people than on perfect code. Don’t you?